Video is the most abundant yet underused source of environmental intelligence. From indoor surveillance to city-scale transit feeds, human activity is increasingly captured on video. Transforming this raw footage into actionable understanding has long been a challenge, but the emergence of vision AI is changing that.

Vision AI applies AI to extract meaning from images and videos. While early forms of AI could only classify simple objects or actions in constrained settings, today’s Vision AI pipelines can detect nuanced behavior, identify sensitive content, understand scene context, and even generate structured video knowledge, all with minimal human oversight.

This transformation was made possible by advances in:

Self-supervised pretraining on large video-text datasets (e.g., Kinetics, Ego4D)

High-performance models like ViT, CLIP, and Cosmos-Reason1 that unify visual and textual reasoning

Open-source tools (YOLO, DeepFace, Whisper) that bring state-of-the-art capabilities to developers and enterprises

Edge AI and cloud infrastructure that have democratized scalable video processing

What was once reserved for elite research labs is now a critical layer in governance-compliant AI systems across industries.

Where vision AI is already delivering value

Vision AI is already transforming high-impact domains ranging from public safety/transit to physical AI/world modeling. In each of the following applications, Vision AI serves not only as a sensor but as an interface layer that enables downstream systems like robotics, large language models (LLMs), and operational dashboards to work with interpreted, compliant, and structured video data.

Public safety/transit

From airports to subway platforms, vision AI gives operators a live feed of risk and response.

Detecting unattended bags, violent group activity, and fall incidents

Monitoring crowds for anomalies or suspicious behavior

Filtering out personally identifiable information (PII) before model training

Providing real-time alerts in metro stations and airports

These capabilities help cities and transit systems react faster and maintain public confidence.

Hospitality/indoor security

Hotels and short-term rentals are using vision AI to protect guests and improve service.

Ensuring compliance with food handling, cleaning protocols, and service standards

Identifying the presence of minors or NSFW situations in recorded footage (e.g., Airbnbs)

Auto-summarizing long security footage into meaningful clips for managers

The result is safer environments and streamlined oversight without constant manual monitoring.

Outdoor and perimeter monitoring

On construction sites, in warehouses, and across urban landscapes, vision AI extends human awareness.

Object tracking in warehouses, construction sites, and urban environments

Pose estimation to detect loitering, falls, or safety violations

Scene-level understanding of changing weather, lighting, or unauthorized access

By spotting risks in real time, vision AI prevents costly accidents and protects assets.

Physical AI and world modeling

Vision AI also feeds the next generation of robotics and digital twins.

Feeding annotated scenes into simulators like NVIDIA Omniverse to train robots in digital twins

Generating synthetic video with structured metadata for rare scenario training (e.g., fire evacuation)

Using vision-language grounding (e.g., COSMOS, LLaVA) to help robots “understand” indoor layouts

This data-rich foundation accelerates robot training and brings realistic world modeling to life.

Components of a responsible Vision AI pipeline

Here’s a simplified breakdown of what happens inside a well-governed, real-world video processing pipeline:

Scene captioning

Generate captions like: “Woman preparing lunch while a child plays nearby.”

Helps downstream systems understand the high-level context of video segments.

Object detection and tracking

Identify people, pets, or equipment, and track them across frames to follow behavior patterns or check for the presence of forbidden items.
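A minimal sketch of the tracking half, assuming a detector (for example a YOLO model) has already produced per-frame bounding boxes. It uses greedy IoU matching, which is a deliberately simpler stand-in for trackers such as BoT-SORT. `GreedyIouTracker`, `min_iou`, and the box format are illustrative names, not part of any library or of Centific's pipeline.

```python
from itertools import count

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels (assumed format)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Keeps IDs stable by matching each detection to the best-overlapping track."""

    def __init__(self, min_iou: float = 0.3) -> None:
        self.min_iou = min_iou          # hypothetical threshold, tune per camera
        self.tracks: dict[int, Box] = {}
        self._ids = count(1)

    def update(self, detections: list[Box]) -> dict[int, Box]:
        unmatched = dict(self.tracks)   # tracks not yet claimed in this frame
        current: dict[int, Box] = {}
        for box in detections:
            best_id = max(unmatched, key=lambda t: iou(unmatched[t], box), default=None)
            if best_id is not None and iou(unmatched[best_id], box) >= self.min_iou:
                del unmatched[best_id]  # reuse the existing ID
            else:
                best_id = next(self._ids)  # start a new track
            current[best_id] = box
        self.tracks = current           # tracks with no match are dropped
        return current
```

Calling `update` once per frame returns a mapping from track ID to box. A production tracker would also keep lost tracks alive for a few frames and use motion or appearance cues, which this sketch omits.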

Audio diarization & PII detection

Understand “who spoke when,” transcribe audio, and flag private info like names or phone numbers.
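As a rough illustration of the flagging step, the sketch below scans speaker-labelled transcript segments for phone numbers and email addresses. It assumes diarization and transcription have already run. The regular expressions are a simplified stand-in for a dedicated PII engine such as Presidio: they cannot detect names, which need named entity recognition. `Segment`, `flag_pii`, and `redact` are hypothetical helpers.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; real PII detection needs NER and locale-aware rules.
PII_PATTERNS = {
    "PHONE_NUMBER": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
}


@dataclass
class Segment:
    speaker: str    # diarization label, e.g. "SPEAKER_00"
    start_s: float  # segment start time in seconds
    end_s: float    # segment end time in seconds
    text: str       # transcribed speech


def flag_pii(segments):
    """Return (speaker, start_s, label, matched_text) for every pattern hit."""
    findings = []
    for seg in segments:
        for label, pattern in PII_PATTERNS.items():
            for match in pattern.finditer(seg.text):
                findings.append((seg.speaker, seg.start_s, label, match.group()))
    return findings


def redact(text):
    """Replace every pattern hit with a placeholder such as <PHONE_NUMBER>."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"<{label}>", text)
    return text
```

Keeping the speaker label and timestamp with each finding lets a later stage mute or bleep the matching audio span rather than discarding the whole clip.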

Face and age detection

Detect faces, estimate age, and flag potential minors, which is important for ethical filtering and compliance.

NSFW filtering

Flag inappropriate content using computer vision models trained on nudity and suggestive imagery.

Motion and event detection

Auto-detect idle time, high activity, or sync cues like clapping to guide what content should be kept or skipped.
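One simple way to approximate idle and activity detection is frame differencing. The sketch below assumes each frame is a flat list of grayscale pixel values (0 to 255) of equal length. The thresholds `max_mean` and `motion_thresh` are hypothetical and would need tuning per camera. Audio cues such as clapping are not covered here.

```python
def mean_abs_diff(prev, curr):
    """Mean absolute pixel difference between two equal-sized grayscale frames."""
    total = sum(abs(a - b) for a, b in zip(prev, curr))
    return total / len(curr)


def is_black(frame, max_mean=8.0):
    """Treat a frame as black when its average brightness is near zero."""
    return sum(frame) / len(frame) <= max_mean


def label_frames(frames, motion_thresh=2.0):
    """Label each frame 'black', 'idle', or 'active'.

    The first non-black frame is labelled 'active' because there is
    nothing earlier to compare it against.
    """
    labels = []
    prev = None
    for frame in frames:
        if is_black(frame):
            labels.append("black")
        elif prev is not None and mean_abs_diff(prev, frame) < motion_thresh:
            labels.append("idle")
        else:
            labels.append("active")
        prev = frame
    return labels
```

Runs of `black` or `idle` labels mark spans that can be skipped, while `active` runs mark content worth keeping for annotation.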

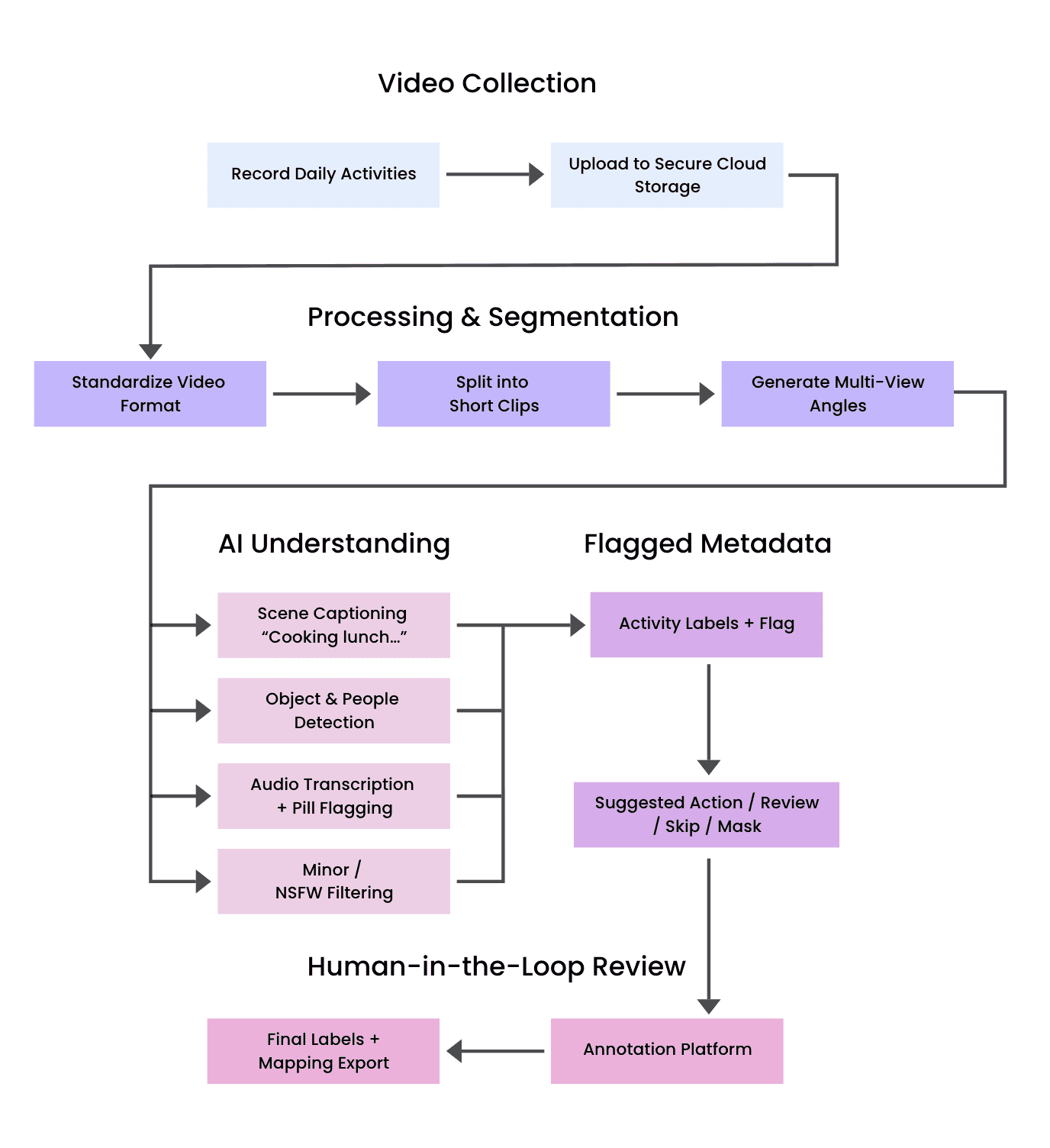

The following image depicts how Centific filters and provides clean video as a pipeline of operations.

Governance: training models safely at scale

Governance is both a policy and a practice. Training vision models on sensitive content (minors, NSFW content, private speech) without proper filtering can:

Violate privacy laws (GDPR, FERPA, HIPAA)

Introduce bias or unsafe behavior into downstream models

Pose reputational or legal risks

That’s why Centific’s pipeline pre-filters video in the following ways:

| Governance risk | Detection method |
|---|---|
| Minors | DeepFace age estimation + face flagging |
| NSFW content | ONNX image classifiers using FalconsAI taxonomy |
| PII in audio | Whisper transcription + Presidio named entity detection |
| Empty/idle frames | Black screen & motion analysis to auto-skip |
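To show how the four signals in the table could be combined into one pre-annotation decision, here is a hedged sketch. It assumes the upstream detectors have already produced per-clip scores. The field names, thresholds, age margin, and routing labels are all hypothetical and are not taken from Centific's pipeline.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClipSignals:
    min_estimated_age: Optional[float]  # youngest face estimate, None if no faces
    nsfw_score: float                   # classifier probability in [0, 1]
    pii_hits: int                       # PII entities found in the transcript
    idle_fraction: float                # share of frames that are black or motionless


def governance_flags(s, *, adult_age=18.0, age_margin=5.0,
                     nsfw_thresh=0.5, idle_thresh=0.9):
    """Return the governance risks raised by one clip (thresholds are assumptions)."""
    flags = []
    # Age estimates are noisy, so flag anything within a margin above adult_age.
    if s.min_estimated_age is not None and s.min_estimated_age < adult_age + age_margin:
        flags.append("possible_minor")
    if s.nsfw_score >= nsfw_thresh:
        flags.append("nsfw")
    if s.pii_hits > 0:
        flags.append("pii_audio")
    if s.idle_fraction >= idle_thresh:
        flags.append("idle")
    return flags


def route(s):
    """Decide what happens to a clip before annotators or training see it."""
    flags = governance_flags(s)
    if "possible_minor" in flags or "nsfw" in flags:
        return "quarantine"
    if "idle" in flags:
        return "skip"
    if "pii_audio" in flags:
        return "redact_then_annotate"
    return "annotate"
```

The ordering puts the most sensitive risks first, so a clip that is both idle and possibly shows a minor is quarantined rather than silently skipped.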

All of this happens before human annotators or model training — shifting the burden from compliance after the fact to governance-by-design. The impact: 31% improvement in annotation speed, or approximately 19 minutes saved per hour of video reviewed.
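The two figures agree if the 31% is read as a reduction in review time per hour of video, which is an interpretation since the baseline is not defined here:

```latex
0.31 \times 60\ \text{min} = 18.6\ \text{min} \approx 19\ \text{min}
```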

Scene understanding enables vision-as-knowledge

Once videos are segmented and captioned, the scene descriptions become queryable nodes in a video knowledge graph. This allows:

RAG + LLM integration: Generate structured answers using GPT-style models over scene data

Federated querying: Search videos by activity type, age group, risk level, or metadata

Training data curation: Build specific datasets for behavior modeling, robotics, or accessibility AI

Each shard is not just a clip; it’s an understood event.
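The querying idea can be sketched with a flat list of scene records, which is a simplification of a real knowledge graph because it has no edges between scenes. `SceneNode`, `query`, and `to_context` are hypothetical names, and the metadata fields are assumptions based on the filters listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneNode:
    clip_id: str
    start_s: float
    end_s: float
    caption: str
    activity: str    # e.g. "cooking", "walking"
    risk_level: str  # e.g. "low", "medium", "high"


def query(nodes, *, activity=None, risk_level=None, text=None):
    """Filter scenes by metadata and by case-insensitive caption substring."""
    results = []
    for n in nodes:
        if activity is not None and n.activity != activity:
            continue
        if risk_level is not None and n.risk_level != risk_level:
            continue
        if text is not None and text.lower() not in n.caption.lower():
            continue
        results.append(n)
    return results


def to_context(nodes):
    """Format matching scenes as a plain-text context block for an LLM prompt."""
    return "\n".join(
        f"[{n.clip_id} {n.start_s:.0f}-{n.end_s:.0f}s] {n.caption}" for n in nodes
    )
```

In a retrieval-augmented setup, the string returned by `to_context` would be placed in the model prompt so that answers cite specific clips and time ranges.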

Advancing into physical AI: from videos to robots

Vision AI is the foundation of physical AI: systems that perceive, reason, and act in the real world. But physical agents need more than raw video. They need structured, diverse, privacy-safe understanding. That’s why Centific’s R&D teams are building:

Sim2Real Pipelines: Use Omniverse + SynthVision to create simulation-ready training environments for robotics, eldercare, agritech, and transit

Agentic AI Frameworks: Modular agents (SceneAgent, VideoAgent, PetAgent) that simulate environment behavior for Physical AI

Synthetic Data Generation: GANs and NeRFs to simulate rare poses, motion, and lighting with auto-labels

Governance-Ready Datasets: Age-filtered, PII-safe, scenario-tagged content for regulatory-safe training

These workflows enable:

Training robots to understand layouts (e.g., where’s the refrigerator in a kitchen?)

Simulating emergency responses (e.g., fall detection in eldercare)

Multi-agent coordination in dynamic environments (e.g., warehouses)

With several possible synthetic combinations of humans, homes, lighting, and activities, Vision AI is the bridge to intelligent agents that navigate the real world safely.
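To make the idea of synthetic combinations concrete, the sketch below enumerates every combination of a few scenario axes and draws a reproducible sample from them. The axis values and helper names are invented for illustration; a real generator would pass each scenario to a simulator or renderer.

```python
import random
from itertools import product


def scenario_grid(**axes):
    """Return one dict per combination of the given axis values."""
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*axes.values())]


def sample_scenarios(grid, k, seed=0):
    """Draw k distinct scenarios with a fixed seed so runs are repeatable."""
    return random.Random(seed).sample(grid, k)


# Hypothetical axes: 2 humans x 3 homes x 2 lighting setups x 2 activities.
grid = scenario_grid(
    human=["adult", "elder"],
    home=["kitchen", "hallway", "bathroom"],
    lighting=["day", "night"],
    activity=["walking", "fall"],
)
```

Here `len(grid)` is 24, and each added axis multiplies that count, which is why sampling or prioritizing rare scenarios usually matters more than exhaustive generation.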

Vision AI as the interface to the physical world

To make Vision AI safe, compliant, and powerful, we must:

Understand scenes, not just frames

Govern sensitive content before training

Bridge annotated real video to simulated environments

Centific’s pipeline and R&D platforms like SynthVision and Cosmos Reasoning offer a scalable, governance-first pathway toward the next frontier: physical AI, where agents not only see and think, but act in the world responsibly.

Want to integrate governance-compliant Vision AI into your annotation or robotics pipeline? Contact us to explore how.

References and model bibliography

Scene Captioning

Cosmos-Reason1 (NVIDIA) - Azzolini, A., Brandon, H., Chattopadhyay, P., et al. (2025). From Physical Common Sense to Embodied Reasoning. arXiv. https://arxiv.org/abs/2505.00001

Object Detection & Tracking

YOLOv11 / YOLOv7 - Wang, C.-Y., Bochkovskiy, A., Liao, H.-Y. M. (2022). YOLOv7: SOTA for Real-Time Detectors. arXiv. https://arxiv.org/abs/2207.02696

BoT-SORT - Aharon, N., Orfaig, R., Bobrovsky, B.-Z. (2022). Robust Multi-Pedestrian Tracking. arXiv. https://arxiv.org/abs/2206.14651

Audio Diarization & PII Detection

pyannote.audio - Bredin, H., Yin, R., Coria, J. M., et al. (2023). Neural Building Blocks for Speaker Diarization. INTERSPEECH. https://huggingface.co/pyannote/speaker-diarization

Whisper - Radford, A., Kim, J. W., Xu, T., et al. (2022). Robust Speech Recognition via Large-Scale Weak Supervision. arXiv. https://arxiv.org/abs/2212.04356

Presidio (PII Detection) - Microsoft Presidio. (2024). Benchmarking Text Anonymization Models. arXiv. https://arxiv.org/abs/2401.00072

Face & Age Detection

DeepFace / LightFace - Serengil, S. I., Ozpinar, A. (2020). LightFace: A Hybrid Deep Face Recognition Framework. ASYU. https://github.com/serengil/deepface

DEX: Deep Expectation of Age - Rothe, R., Timofte, R., Van Gool, L. (2015). Apparent Age Estimation from a Single Image. ICCV Workshops. https://data.vision.ee.ethz.ch/cvl/rrothe/imdb-wiki/

NSFW Classification

FalconsAI ONNX Model - Hugging Face. onnx-community/nsfw_image_detection. https://huggingface.co/onnx-community/nsfw_image_detection

Yahoo NSFW Classifier - Mahadeokar, J., Pesavento, G. (2016). Open-Sourcing a Deep Learning Solution for Detecting NSFW Images. https://yahooeng.tumblr.com/post/151148689421
