Access our specialized experts who provide comprehensive guidance, perform assessments, and develop key business strategies to identify and resolve potential biases, privacy concerns, and fairness issues in a variety of AI solutions. We work closely with clients to establish and deliver robust ethical frameworks, implement best practices, and adhere to all regulatory requirements, fostering trust and driving responsible AI innovation.

Our expertise in data services ranges from validation to refinement, ensuring an all-inclusive review of your data needs for ethical and safe AI execution. We also apply reinforcement learning from human feedback (RLHF), combining our global talent pool of 1M+ experts with our AI-based techniques to guarantee your models will improve significantly over time.

Chatbots and GenAI have become part of our daily lives thanks to large language models (LLMs), so ensuring your applications have robust safeguards to prevent harmful behaviors is a critical mission. Our AI red teaming experts have refined prompt hacking techniques and frameworks to keep your model safe and secure.

Our benchmarking services deliver performance assessments of the various GenAI models available for specific business cases. We do this by creating custom evaluation datasets that help us select the appropriate models for what each client is trying to achieve through their AI initiatives.

Enhancing the resilience of machine learning models against deliberate attacks or perturbations

Easily understanding and interpreting decisions or predictions from AI models

Ensuring clarity of overall model development and deployment processes

Avoiding bias or discrimination in ML models, ensuring equitable treatment and outcomes across different groups or individuals

Safeguarding sensitive or personal information contained within the data used for training or making predictions

Ensuring accuracy, speed, resource utilization, and other relevant metrics are leveraged to define success

Achieving a high degree of certainty or trust in predictions or decisions

Recreating and validating ML experiments and results quickly and easily

Performing well with unseen data, ensuring reliable and unbiased predictions across diverse contexts

Avoiding the possibility of any negative impact on humans and our environment

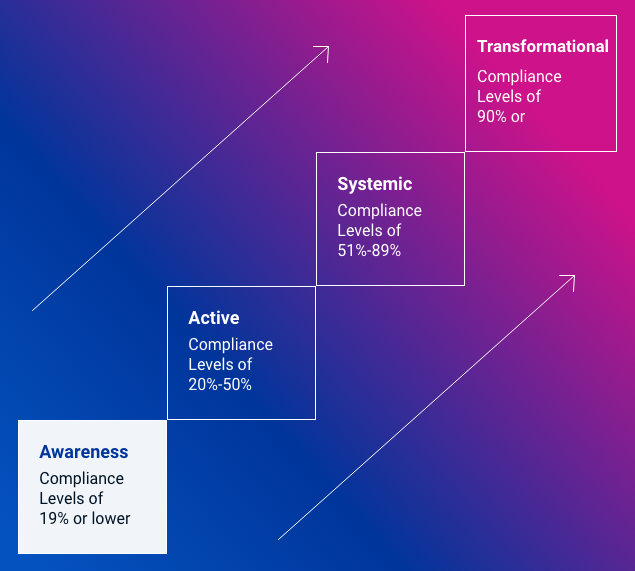

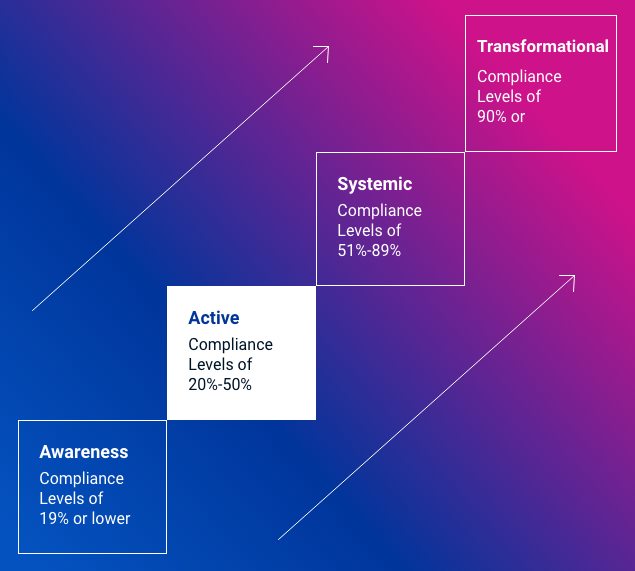

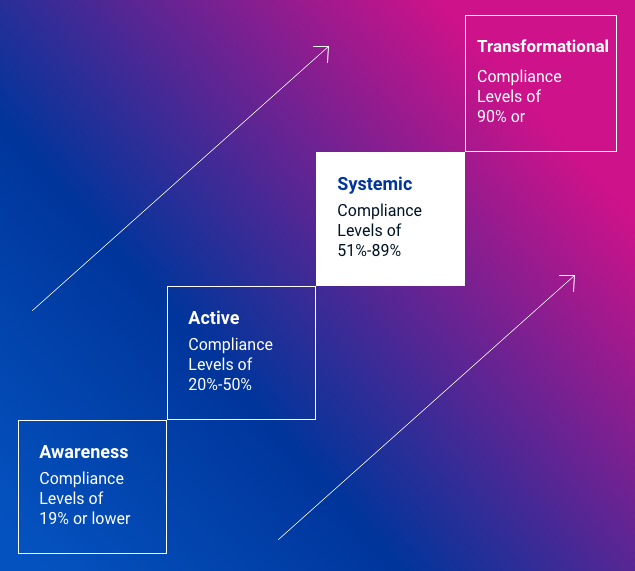

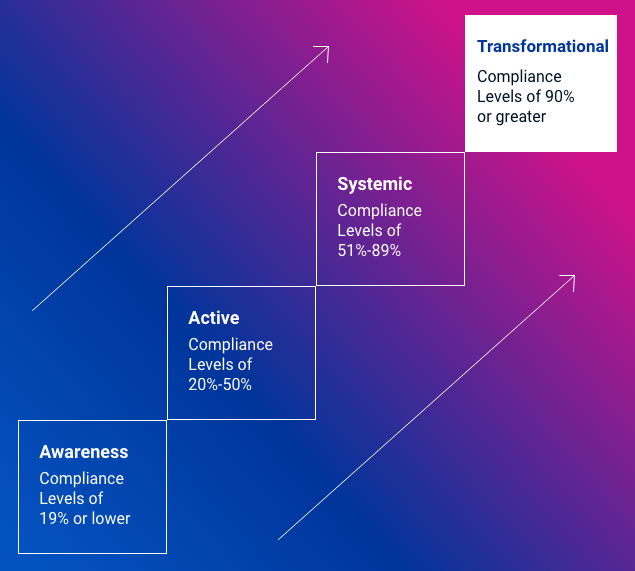

In this initial stage, organizations have an AI maturity level of less than 20%. They are aware of potential biases, fairness issues, privacy concerns, and other ethical challenges in their AI development and deployment processes. However, they have no clear strategy, methodology, or tools that allow them to address these issues swiftly or comprehensively.

In the second stage, organizations have an AI maturity level between 20% and 50%. They are mainly focused on implementing guidelines, policies, and practices to ensure fairness, transparency, and accountability in AI systems. They also consider a variety of strategic activities, such as conducting impact assessments, adopting ethical AI frameworks, and training AI developers on responsible AI practices. However, there may not yet be a tangible effort in place that aligns AI development with ethical principles.

In the third stage, organizations have reached an AI maturity level between 50% and 90%. This is the result of a more deeply ingrained integration of responsible best practices throughout the entire organization and its culture, processes, and technologies. An AI governance setup is therefore required to ensure and sustain the highest level of integrity at all levels.

In the final stage, organizations have successfully achieved an AI maturity level of over 90%. Responsible AI practices are no longer just a set of checkboxes. Organizations continue to contribute to, support, and promote key AI principles across teams, and tend to share best practices with others in their industry and relevant communities.
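The four maturity bands described above can be sketched as a simple lookup. This is a minimal illustration, not a description of any actual assessment tooling: the function name `maturity_stage` is hypothetical, and because the ranges as stated meet at 50%, the choice to place exactly 50% in the third stage is an assumption.

```python
def maturity_stage(maturity_pct: float) -> int:
    """Map an AI maturity percentage (0-100) to a stage number (1-4).

    Bands follow the text: below 20%, 20%-50%, 50%-90%, over 90%.
    Assumption: exactly 50% is treated as the third stage, since the
    text does not say which band owns that boundary.
    """
    if not 0 <= maturity_pct <= 100:
        raise ValueError("maturity_pct must be between 0 and 100")
    if maturity_pct < 20:
        return 1  # initial stage: less than 20%
    if maturity_pct < 50:
        return 2  # second stage: 20% up to (but not including) 50%
    if maturity_pct <= 90:
        return 3  # third stage: 50% through 90%
    return 4      # final stage: over 90%
```

For example, `maturity_stage(35)` falls in the second stage, while `maturity_stage(95)` falls in the final one.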

Perform a risk assessment of the AI solution/model with respect to the safe AI framework, and provide a mitigation plan and recommendations.

Classify the model maturity and create the roadmap.

Implement tool-based monitoring and reporting for model maturity improvements.